Complete Features

These features were completed when this image was assembled

- Feature complete including (next line)

- OVN-K

Goal

Improve the kubevirt-csi storage plugin features and integration as we make progress towards the GA of a KubeVirt provider for HyperShift.

User Stories

- "As a hypershift user,

I want infra cluster StorageClasses made available to guest clusters,

so that guest clusters can have persistent storage available."

Infra storage classes made available to guest clusters must support:

- RWX AccessMode

- Filesystem and Block VolumeModes

Non-Requirements

- VolumeSnapshots

- CSI Clone

- Volume Expansion

Notes

- Any additional details or decisions made/needed

Done Checklist

| Who | What | Reference |

|---|---|---|

| DEV | Upstream roadmap issue (or individual upstream PRs) | <link to GitHub Issue> |

| DEV | Upstream documentation merged | <link to meaningful PR> |

| DEV | gap doc updated | <name sheet and cell> |

| DEV | Upgrade consideration | <link to upgrade-related test or design doc> |

| DEV | CEE/PX summary presentation | label epic with cee-training and add a <link to your support-facing preso> |

| QE | Test plans in Polarion | <link or reference to Polarion> |

| QE | Automated tests merged | <link or reference to automated tests> |

| DOC | Downstream documentation merged | <link to meaningful PR> |

kubevirt-csi should support/apply fsGroup settings in filesystems created by kubevirt-csi

Based on the perf scale team's results, enabling multiqueue when jumbo frames (MTU >=9000) can greatly improve throughput. as see by comparing slides 8 and 10 in this slide deck, https://docs.google.com/presentation/d/1cIm4EcAswVDpuDp-eHVmbB7VodZqQzTYCnx4HCfI9n4/edit#slide=id.g2563dda6aa5_1_68

However enabling multiqueue with small MTU causes "throughput to crater".

This task involves adding an API option to the kubevirt platform within the nodepool api, as well as adding a cli option for enabling multiqueue in the hcp cli (new productized cli)

The HyperShift KubeVirt platform only supports guest clusters running 4.14 or greater (due to the kubevirt rhcos image only being delivered in 4.14)

and it also only supports OCP 4.14 and CNV 4.14 for the infra cluster.

Add backend validation on the HostedCluster that validates the parameters are correct before processing the hosted cluster. If these conditions are not met, then report back the error as a condition on the hosted cluster CR

Problem Alignment

The Problem

Many customers still predominantly use logs as a main source to capture data that's important to quickly identify problems. Many issues can also be identified by metrics but there are some events in security, such as suspicious IP address activity, or runtime system issues such as host errors, where logs are your friend. OpenShift currently only support defining alerting rules and get notification based on metrics. That leaves a big gap to help identifying and being notified for the previous mentioned events immediately.

High-Level Approach

As we move the Logging stack towards using Loki (see OBSDA-7), we will be able to use it's out-of-the-box capabilities to define alerting rules on logs using LogQL. That approach is very similar to Prometheus' alerting ecosystem and actually gives us the opportunity to reuse Prometheus' Alertmanager to distribute alerts/notifications. For customers, this means they do not need to configure different channels twice, for metrics and logs, but reuse the same configuration.

For the configuration itself, we need to look into introducing a CRD (similar to the PrometheusRule CRD inside the Prometheus Operator) to allow users with non-admin permissions to configure the rules without changing the central Loki configuration.

Goal & Success

- Allow individual users to configure alerting rules based on patterns inside a log record.

Solution Alignment

Key Capabilities

- As an Application SRE, I'd like to configure SLIs to get alerted when the number of messages that meet some criteria (e.g. errors) exceeds a particular threshold.

- As an Application SRE, I'd like to configure where alerts will be send so that I get notified on the right channels.

Key Flows

Open Questions & Key Decisions (optional)

- Do we provide integration into Prometheus Alertmanager only and if so, how?

- Note: We could integrate into our Monitoring's Alertmanager automatically but what happens if a customer decided to use an external Alertmanager and configures that inside Monitoring. I think we need to discuss this with the Monitoring team and identify if we actually want a more centralized approach to Alerting as supposed to divide into Metrics and Logs with each some dedicated instance. I think that's another perfect use case for why Observatorium would be better to use in general in the future. It combines Metrics and Log stack into one single deployment and we could only expose a single Alertmanager and a configuration for pointing Prometheus and Loki to an external instance if necessary.

Goals

- Enable OpenShift Application owners to create alerting rules based on logs scoped only for applications they have access to.

- Provide support for notifying on firing alerts in OpenShift Console

Non-Goals

- Provide support for logs-based metrics that can be used in PrometheusRule custom resources for alerting.

Motivation

Since OpenShift 4.6, application owners can configure alerting rules based on metrics themselves as described in User Workload Monitoring (UWM) enhancement. The rules are defined as PrometheusRule resources and can be based on platform and/or application metrics.

To expand the alerting capabilities on logs as an observability signal, cluster admins and application owners should be able to configure alerting rules as described in the Loki Rules docs and in the Loki Operator Ruler upstream enhancement.

AlertingRule CRD fullfills the requirement to define alerting rules for Loki similar to PrometheusRule.

RulerConfig CRD fullfills the requirement to connect the Loki Ruler component to notify a list of Prometheus AlertManager hosts on firing alerts.

Alternatives

- Use only the RecordingRule CRD to export logs as metrics first and rely on present cluster-monitoring/user-workload-monitoring alerting capabilities.

Acceptance Criteria

- OpenShift Application owners receive notifications for application logs-based alerts on the same OpenShift Console Alerts view as with metrics.

Risk and Assumptions

- Assuming that the present OpenShift Console implementation for Alerts view is compatible to list and manage alerts from Alertmanager which originate from Loki.

- Assuming that the present UWM tenancy model applies to the logs-based alerts.

Documentation Considerations

Open Questions

Additional Notes

- Enhancement proposal: Cluster Logging: Logs-Based Alerts

Feature Overview (aka. Goal Summary)

An elevator pitch (value statement) that describes the Feature in a clear, concise way. Complete during New status.

Goals (aka. expected user outcomes)

The observable functionality that the user now has as a result of receiving this feature. Complete during New status.

Requirements (aka. Acceptance Criteria):

A list of specific needs or objectives that a feature must deliver in order to be considered complete. Be sure to include nonfunctional requirements such as security, reliability, performance, maintainability, scalability, usability, etc. Initial completion during Refinement status.

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. Initial completion during Refinement status.

Interoperability Considerations

Which other projects and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Design Doc:

Design Doc:

https://docs.google.com/document/d/1m6OYdz696vg1v8591v0Ao0_r_iqgsWjjM2UjcR_tIrM/

Problem:

Goal

As a developer, I want to be able to test my serverless function after it's been deployed.

Why is it important?

Use cases:

- As a developer, I want to test my serverless function

Acceptance criteria:

- This features needs to work in ACM (Multi cluster environment when console is being run on the Hub cluster)

Dependencies (External/Internal):

Please add a spike to see if there are dependencies.

Design Artifacts:

Exploration:

Developers can use the the kn func invoke CLI to accomplish this. According to Naina, there is an API, but it's in Go.

Note:

Description

As a user, I want to invoke a Serverless function from the developer console. This action should be available as a page and as a modal.

Acceptance Criteria

- A backend proxy to invoke a serverless function (or a k8s service in general) from the frontend without a public route.

- The API endpoint should be only accessible to logged-in users.

- Should also work when the bridge is running off-cluster (as developers start them mostly for local development)

Additional Details:

This will be similar to the web terminal proxy, except that no auth headers will be passed to the underlying service.

We need something similar to:

POST /proxy/in-cluster

{

endpoint: string

# Or just service: string ?? tbd.

headers: Record<string, string | string[]>

body: string

timeout: number

}

Description

As a user, I want to invoke a Serverless function from the developer console. This action should be available as a page and as a modal.

This story depends on ODC-7273, ODC-7274, and ODC-7288. This story should bring the backend proxy, and the frontend together and finalize the work.

Acceptance Criteria

- Write proper types if they are missed

- Connect the form and invoke a serverless function, consume and show the response

- Unit testes

- E2E tests

Additional Details:

Description

As a user, I want to invoke a Serverless function from the developer console. This action should be available as a page and as a modal.

This story is to evaluate a good UI for this and check this with our PM (Serena) and the Serverless team (Naina and Lance).

Acceptance Criteria

- Add a new page with title "Invoke Serverless function {function-name}" and should be available via a new URL (/serverless/ns/:ns/invoke-function/:function-name/).

- Implement a form with formik to "invoke" (console.log for now) Serverless functions, without writing the network call for this already. Focus on the UI to get feedback as early as possible. Use reusable, well-named components anyway.

- The page should be also available as a modal. Add a new action to all Serverless Services with the label (tbd) to open this modal from the Topology graph or from the Serverless Service list view.

- The page should have two tabs or two panes for the request and response. Each of this tabs/panes should have again two tabs, "similar" to the browser network inspector. See below for what we know currently.

- Get confirmation from Christoph, Serena, Naina, and Lance.

- Disable the action until we implement the network communication in

ODC-7275with the serverless function. - No e2e tests are needed for this story.

Additional Details:

Information the form should show:

- Request tab shows "Body" and "Options" tab

- Body is just a full size editor. We should reuse our code editor.

- Options contains:

- Auto complete text field “Content type” with placeholder “application/json”, that will be used when nothing is entered

- Dropdown “Format” with values “cloudevent” (default) and “http”

- Text field “Type” with placeholder text “boson.fn”, that will be used when nothing is entered

- Text field “Source” with placeholder “/boson/fn”, that will be used when nothing is entered

- Response tab shows Body and Info tab

- Body is a full size editor that shows the response. We should format a JSON string with JSON.stringify(data, null, 2)

- Info contains:

- Id (id)

- Type (type)

- Source (source)

- Time (time) (formatted)

- Content-Type: (datacontenttype)

< High-Level description of the feature ie: Executive Summary >

Goals

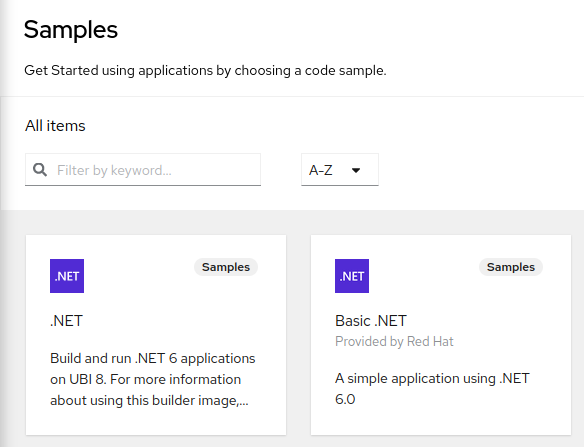

Cluster administrators need an in-product experience to discover and install new Red Hat offerings that can add high value to developer workflows.

Requirements

| Requirements | Notes | IS MVP |

| Discover new offerings in Home Dashboard | Y | |

| Access details outlining value of offerings | Y | |

| Access step-by-step guide to install offering | N | |

| Allow developers to easily find and use newly installed offerings | Y | |

| Support air-gapped clusters | Y |

< What are we making, for who, and why/what problem are we solving?>

Out of scope

Discovering solutions that are not available for installation on cluster

Dependencies

No known dependencies

Background, and strategic fit

Assumptions

None

Customer Considerations

Documentation Considerations

Quick Starts

What does success look like?

QE Contact

Impact

Related Architecture/Technical Documents

Done Checklist

- Acceptance criteria are met

- Non-functional properties of the Feature have been validated (such as performance, resource, UX, security or privacy aspects)

- User Journey automation is delivered

- Support and SRE teams are provided with enough skills to support the feature in production environment

Problem:

Developers using Dev Console need to be made aware of the RH developer tooling available to them.

Goal:

Provide awareness to developers using Dev Console of the RH developer tooling that is available to them, including:

- odo

- OpenShift IDE extension, which is available on IntelliJ & VScode

- VSCode Knative IDE extension: https://marketplace.visualstudio.com/items?itemName=redhat.vscode-knative

- IntelliJ Knative Plugin: https://plugins.jetbrains.com/plugin/16476-knative--serverless-functions-by-red-hat

- Dev spaces

Consider enhancing the +Add page and/or the Guided tour

Provide a Quick Start for installing the Cryostat Operator

Why is it important?

To increase usage of our RH portfolio

Acceptance criteria:

- Quick Start - Installing Cryostat Operator

- Quick Start - Get started with JBoss EAP using a Helm Chart

- Discoverability of the IDE extensions from Create Serverless form

- Update Terminal step of the Guided Tour to indicate that odo CLI is accessible (link to https://developers.redhat.com/products/odo/overview)

Dependencies (External/Internal):

Design Artifacts:

Exploration:

Note:

Description

Update Terminal step of the Guided Tour to indicate that odo CLI is accessible - https://developers.redhat.com/products/odo/overview

Acceptance Criteria

- Update Guided tour of Web Terminal to add odo CLI link

- On click of link user has to redirected to respective page

Additional Details:

Description

Add below IDE extensions in create serverless form,

- VSCode Knative IDE extension: https://marketplace.visualstudio.com/items?itemName=redhat.vscode-knative

- IntelliJ Knative Plugin: https://plugins.jetbrains.com/plugin/16476-knative--serverless-functions-by-red-hat

Acceptance Criteria

- In create serverless form add above IDE extensions

- On click of the link, user needs to take to respective pages

- Add e2e tests for that

Additional Details:

This issue is to handle the PR comment - https://github.com/openshift/console-operator/pull/770#pullrequestreview-1501727662 for the issue https://issues.redhat.com/browse/ODC-7292

We are deprecating DeploymentConfig with Deployment in OpenShift because Deployment is the recommended way to deploy applications. Deployment is a more flexible and powerful resource that allows you to control the deployment of your applications more precisely. DeploymentConfig is a legacy resource that is no longer necessary. We will continue to support DeploymentConfig for a period of time, but we encourage you to migrate to Deployment as soon as possible.

Here are some of the benefits of using Deployment over DeploymentConfig:

- Deployment is more flexible. You can specify the number of replicas to deploy, the image to deploy, and the environment variables to use.

- Deployment is more powerful. You can use Deployment to roll out changes to your applications in a controlled manner.

- Deployment is the recommended way to deploy applications. OpenShift will continue to improve Deployment and make it the best way to deploy applications.

We hope that you will migrate to Deployment as soon as possible. If you have any questions, please contact us.

Epic Goal

- Make it possible to disable the DeploymentConfig and BuildConfig APIs, and associated controller logic.

Given the nature of this component (embedded into a shared api server and controller manager), this will likely require adding logic within those shared components to not enable specific bits of function when the build or DeploymentConfig capability is disabled, and watching the enabled capability set so that the components enable the functionality when necessary.

I would not expect us to split the components out of their existing location as part of this, though that is theoretically an option.

Why is this important?

- Reduces resource footprint and bug surface area for clusters that do not need to utilize the DeploymentConfig or BuildConfig functionality, such as SNO and OKE.

Acceptance Criteria (Mandatory)

- CI - MUST be running successfully with tests automated (we have an existing CI job that runs a cluster with all optional capabilities disabled. Passing that job will require disabling certain deploymentconfig tests when the cap is disabled)

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- Cluster install capabilities

Previous Work (Optional):

- The optional cap architecture and guidance for adding a new capability is described here: https://github.com/openshift/enhancements/blob/master/enhancements/installer/component-selection.md

Open questions::

None

Done Checklist

- Acceptance criteria are met

- Non-functional properties of the Feature have been validated (such as performance, resource, UX, security or privacy aspects)

- User Journey automation is delivered

- Support and SRE teams are provided with enough skills to support the feature in production environment

Make the list of enabled/disable controllers in OAS reflect enabled/disabled capabilities.

Acceptance criteria:

- OAS allows to specify a list of enabled/disabled APIs (e.g. watches, caches, ...)

- OASO watches capabilities and generates the right configuration for OAS with enabled/disabled list of APIs

- Documentation is properly updated

QE:

- enabled/disable capabilities and validate a given API (DC, Builds, ...) is/is not managed by a cluster:

- checking the OAS logs do/do not log entries about affected API(s)

- DC/Builds objects are created/fail to be created

Feature Overview

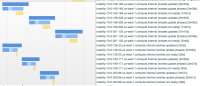

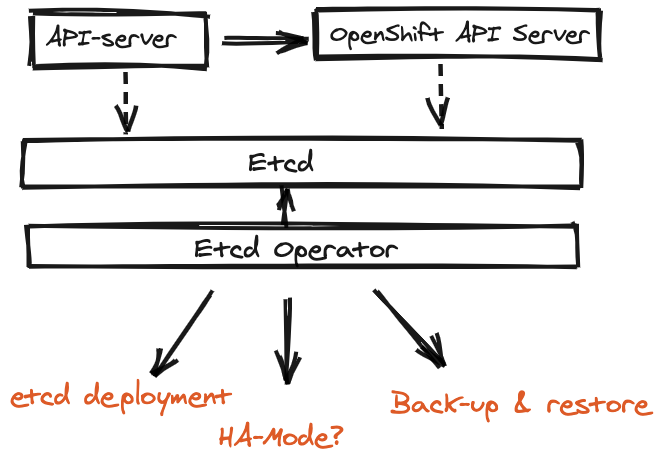

At the moment, HyperShift is relying on an older etcd operator (i.e, the CoreOS etcd operator). However, this operator is basic and does not support HA as required.

Goals

Introduce a reliable component to operate Etcd that:

- Is backed up by a stable operator

- Supports Images with a Hash

- Supprts for Backups

- Local-persistent volumes for persistent data?

- Encryption.

- HA and Scalablity.

For an initial MVP of service delivery adoption of Hypershift we need to enable support for manual cluster migration.

Additional information: https://docs.google.com/presentation/d/1JDfd34jvj_4VvVn1bNieSXRejbFqAs_g8G-5rBqTtxw/edit?usp=sharing

Following on from https://issues.redhat.com/browse/HOSTEDCP-444 we need to add the steps to enable migration of the Node/CAPI resources to enable workloads to continue running during controlplane migration.

This will be a manual process where controlplane downtime will occur.

This must satisfy a successful migration criteria:

- All HC conditions are positive.

- All NodePool conditions are positive.

- All service endpoints kas/oauth/ignition server... are reachable.

- Ability to create/scale NodePools remains operational.

We need to validate and document this manually for starters.

Eventually this should be automated in the upcoming e2e test.

We could even have a job running conformance tests over a migrated cluster

Epic Goal

As an OpenShift on vSphere administrator, I want to specify static IP assignments to my VMs.

As an OpenShift on vSphere administrator, I want to completely avoid using a DHCP server for the VMs of my OpenShift cluster.

Why is this important?

Customers want the convenience of IPI deployments for vSphere without having to use DHCP. As in bare metal, where METAL-1 added this capability, some of the reasons are the security implications of DHCP (customers report that for example depending on configuration they allow any device to get in the network). At the same time IPI deployments only require to our OpenShift installation software, while with UPI they would need automation software that in secure environments they would have to certify along with OpenShift.

Acceptance Criteria

- I can specify static IPs for node VMs at install time with IPI

Previous Work

Bare metal related work:

METAL-1- https://github.com/openshift/enhancements/blob/master/enhancements/network/baremetal-ipi-network-configuration.md

CoreOS Afterburn:

https://github.com/coreos/afterburn/blob/main/src/providers/vmware/amd64.rs#L28

https://github.com/openshift/installer/blob/master/upi/vsphere/vm/main.tf#L34

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Epic Goal

As an OpenShift on vSphere administrator, I want to specify static IP assignments to my VMs.

As an OpenShift on vSphere administrator, I want to completely avoid using a DHCP server for the VMs of my OpenShift cluster.

Why is this important?

Customers want the convenience of IPI deployments for vSphere without having to use DHCP. As in bare metal, where METAL-1 added this capability, some of the reasons are the security implications of DHCP (customers report that for example depending on configuration they allow any device to get in the network). At the same time IPI deployments only require to our OpenShift installation software, while with UPI they would need automation software that in secure environments they would have to certify along with OpenShift.

Acceptance Criteria

- I can specify static IPs for node VMs at install time with IPI

Previous Work

Bare metal related work:

METAL-1- https://github.com/openshift/enhancements/blob/master/enhancements/network/baremetal-ipi-network-configuration.md

CoreOS Afterburn:

https://github.com/coreos/afterburn/blob/main/src/providers/vmware/amd64.rs#L28

https://github.com/openshift/installer/blob/master/upi/vsphere/vm/main.tf#L34

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

{}USER STORY:{}

As an OpenShift administrator, I want to apply an IP configuration so that I can adhere to my organizations security guidelines.

{}DESCRIPTION:{}

The vSphere machine controller needs to be modified to convert nmstate to `guestinfo.afterburn.initrd.network-kargs` upon cloning the template for a new machine. An example of this is here: https://github.com/openshift/machine-api-operator/pull/1079

{}Required:{}

- PR is approved

- Associated test(s) are created in https://github.com/openshift/cluster-api-actuator-pkg

{}Nice to have:{}

{}ACCEPTANCE CRITERIA:{}

{}ENGINEERING DETAILS:{}

Feature Overview

With this feature MCE will be an additional operator ready to be enabled with the creation of clusters for both the AI SaaS and disconnected installations with Agent.

Currently 4 operators have been enabled for the Assisted Service SaaS create cluster flow: Local Storage Operator (LSO), OpenShift Virtualization (CNV), OpenShift Data Foundation (ODF), Logical Volume Manager (LVM)

The Agent-based installer doesn't leverage this framework yet.

Goals

When a user performs the creation of a new OpenShift cluster with the Assisted Installer (SaaS) or with the Agent-based installer (disconnected), provide the option to enable the multicluster engine (MCE) operator.

The cluster deployed can add itself to be managed by MCE.

Background, and strategic fit

Deploying an on-prem cluster 0 easily is a key operation for the remaining of the OpenShift infrastructure.

While MCE/ACM are strategic in the lifecycle management of OpenShift, including the provisioning of all the clusters, the first cluster where MCE/ACM are hosted, along with other supporting tools to the rest of the clusters (GitOps, Quay, log centralisation, monitoring...) must be easy and with a high success rate.

The Assisted Installer and the Agent-based installers cover this gap and must present the option to enable MCE to keep making progress in this direction.

Assumptions

MCE engineering is responsible for adding the appropriate definition as an olm-operator-plugins

See https://github.com/openshift/assisted-service/blob/master/docs/dev/olm-operator-plugins.md for more details

Epic Goal

- When an Assisted Service SaaS user performs the creation of a new OpenShift cluster, provide the option to enable the multicluster engine (MCE) operator.

- 4 operators currently have been enabled for the Assisted Service SaaS create cluster flow:

- Local Storage Operator (LSO), OpenShift Virtualization (CNV), OpenShift Data Foundation (ODF), Logical Volume Manager (LVM)

- MCE engineering is responsible for adding the appropriate definition as an olm-operator-plugins

Why is this important?

- Expose users in the Assisted Service SaaS to the value of the MCE

- Customers/users want to leverage the cluster lifecycle capabilities within MCE inside of their on premises environment.

- The 'cluster0' can be initiated from Assisted Service SaaS and include MCE hub for cluster deployment within the customer datacenter.

Automated storage configuration

- The Infrastructure Operator, a dependency of MCE to deploy bare metal, vSphere and Nutanix clusters, requires storage. There are 3 scenarios to automate storage:

- User selects to install ODF and MCE:

- ODF is the ideal storage for clusters but requires an additional subscriptions.

- When selected along with MCE it will be configured as the storage required by the Infrastructure Operator and the Infrastructure Operator will be deployed along with MCE.

- User deploys an SNO cluster, which supports LVMS as its storage and is available to all OpenShift users.

- If the user also chooses ODF then ODF is used for the Infrastructure Opertor

- If ODF isn't configured then LVMS is enabled and the Infrastructure Operator will use it.

- User doesn't install ODF or a SNO cluster

- They have to choose their storage and then install the Infrastructure Operator in day-2

Scenarios

- When a RH cloud user logs into console.redhat SaaS, they can leverage the Assisted Service SaaS flow to create a new cluster

- During the Assisted Service SaaS create flow, a RH cloud user can see a list of available operators that they want to install at the same time as the cluster create.

- An option is offered to select check a box next to "multicluster engine for Kubernetes (MCE)"

- The RH cloud user can read a tool-tip or info-box with short description of the MCE and click a link for more details to review MCE documentation

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- Ensure MCE release channel can automatically deploy the latest x.y.z without needing any DevOps/SRE intervention

- Ensure MCE release channel can be updated quickly (if not automatically) to ensure the later release x.y can be offered to the cloud user.

Dependencies (internal and external)

- ...

Previous Work (Optional):

- for example, CNV operator: https://github.com/openshift/assisted-service/blob/master/internal/operators/cnv/manifest.go#L165

Open questions:

- Is there any automation that will pickup the next stable-x.y MCE or do we need to manually do it with each release? For example, when MCE 2.2 comes out do we need to update the SaaS plugin code or does it automatically move to the next. Note for example how the OLM subscription looks - and stable-2.2 will appear once MCE 2.2 comes out.

- How challenging is this to maintain as new OCP releases come out and QE must be performed?

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Authentication-operator ignores noproxy settings defined in the cluster-wide proxy.

Expected outcome: When noproxy is set, Authentication operator should initialize connections through ingress instead of the cluster-wide proxy.

Background and Goal

Currently in OpenShift we do not support adding 3rd party agents and other software to cluster nodes. While rpm-ostree supports adding packages, we have no way today to do that in a sane, scalable way across machineconfigpools and clusters. Some customers may not be able to meet their IT policies due to this.

In addition to third party content, some customers may want to use the layering process as a point to inject configuration. The build process allows for simple copying of config files and the ability to run arbitrary scripts to set user config files (e.g. through an Ansible playbook). This should be a supported use case, except where it conflicts with OpenShift (for example, the MCO must continue to manage Cri-O and Kubelet configs).

Example Use Cases

- Bare metal firmware update software that is packaged as an RPM

- Host security monitors

- Forensic tools

- SEIM logging agents

- SSH Key management

- Device Drivers from OEM/ODM partners

Acceptance Criteria

- Administrators can deploy 3rd party repositories and packages to MachineConfigPools.

- Administrators can easily remove added packages and repository files.

- Administrators can manage system configuration files by copying files into the RHCOS build. [Note: if the same file is managed by the MCO, the MachineConfig version of the file is expected to "win" over the OS image version.]

Background

As part of enabling OCP CoreOS Layering for third party components, we will need to allow for package installation to /opt. Many OEMs and ISVs install to /opt and it would be difficult for them to make the change only for RHCOS. Meanwhile changing their RHEL target to a different target would also be problematic as their customers are expecting these tools to install in a certain way. Not having to worry about this path will provide the best ecosystem partner and customer experience.

Requirements

- Document how 3rd party vendors can be compatible with our current offering.

- Provide mechanism for 3rd party vendors or their customers to provide information for exceptions that require an RPM to install binaries to /opt as an install target path.

e2e test in our ci to override kernel

Possibly repurpose https://github.com/openshift/os/tree/master/tests/layering

Feature Overview (aka. Goal Summary)

Add support for custom security groups to be attached to control plane and compute nodes at installation time.

Goals (aka. expected user outcomes)

Allow the user to provide existing security groups to be attached to the control plane and compute node instances at installation time.

Requirements (aka. Acceptance Criteria):

The user will be able to provide a list of existing security groups to the install config manifest that will be used as additional custom security groups to be attached to the control plane and compute node instances at installation time.

Out of Scope

The installer won't be responsible of creating any custom security groups, these must be created by the user before the installation starts.

Background

We do have users/customers with specific requirements on adding additional network rules to every instance created in AWS. For OpenShift these additional rules need to be added on day-2 manually as the Installer doesn't provide the ability to add custom security groups to be attached to any instance at install time.

MachineSets already support adding a list of existing custom security groups, so this could be automated already at install time manually editing each MachineSet manifest before starting the installation, but even for these cases the Installer doesn't allow the user to provide this information to add the list of these security groups to the MachineSet manifests.

Documentation Considerations

Documentation will be required to explain how this information needs to be provided to the install config manifest as any other supported field.

Epic Goal

- Allow the user to provide existing security groups to be attached to the control plane and compute node instances at installation time.

Why is this important?

- We do have users/customers with specific requirements on adding additional network rules to every instance created in AWS. For OpenShift these additional rules need to be added on day-2 manually as the Installer doesn't provide the ability to add custom security groups to be attached to any instance at install time.

MachineSets already support adding a list of existing custom security groups, so this could be automated already at install time manually editing each MachineSet manifest before starting the installation, but even for these cases the Installer doesn't allow the user to provide this information to add the list of these security groups to the MachineSet manifests.

Scenarios

- The user will be able to provide a list of existing security groups to the install config that will be used as additional custom security groups to be attached to the control plane and compute node instances at installation time.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

Previous Work (Optional):

- Compute Nodes managed by MAPI already support this feature

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

User Story:

As a (user persona), I want to be able to:

- Add custom security groups for compute nodes

- Add custom security groups for control plane nodes

so that I can achieve

- Control Plane and Compute nodes can support operational specific security rules. For instance: specific traffic may be required for compute vs control plane nodes.

Acceptance Criteria:

Description of criteria:

- The control plane and compute machine sections of the install config accept user input as additionalSecurityGroupIDs (when using the aws platform).

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

-

additionalSecurityGroupIDs: description: AdditionalSecurityGroupIDs contains IDs of additional security groups for machines, where each ID is presented in the format sg-xxxx. items: type: string type: array

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

Feature Overview (aka. Goal Summary)

Scaling of pod in Openshift highly depends on customer workload and their hardware setup . Some workloads on certain hardware might not scale beyond 100 pods and others might scale to 1000 pods .

As a openshift admin i want to monitor metrics that will indicate why i am not able to scale my pods . think of pressure gauge that will tell customer when its green ( can scale) when its red ( not scale)

As a openshift support team if a customer call in with their complain about pod scaling then i should be able to check some metrics and inform them why they are not able to scale

Goals (aka. expected user outcomes)

Metrics and alert and dashboard

Requirements (aka. Acceptance Criteria):

able to integrate these metrics and alert in a monitoring dashboard

OCP/Telco Definition of Done

Epic Template descriptions and documentation.

<--- Cut-n-Paste the entire contents of this description into your new Epic --->

Epic Goal

- To come up with set of metrics that indicate optimal node resource usage.

Why is this important?

- These metrics will help customers to understand the capacity they have instead of restricting themselves to hard coded max pod limit.

Scenarios

- As a owner of extremely high capacity machine, I want to be able to deploy as many pods as my machine can handle.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- None

Previous Work (Optional):

Open questions::

- The challenging part is come up with set of metrics that accurately indicate system resource usage.

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

We need to have an operator to inject dashboard jsonnet. E.g. etcd team injects their dashboard jsonnet using their operator in the form of a config map.

We will need similar approach for node dashboard.

In this feature will follow up OCPBU-186 Image mirroring by tags.

OCPBU-186 implemented new API ImageDigestMirrorSet and ImageTagMirrorSet and rolling of them through MCO.

This feature will update the components using ImageContentSourcePolicy to use ImageDigestMirrorSet.

The list of the components: https://docs.google.com/document/d/11FJPpIYAQLj5EcYiJtbi_bNkAcJa2hCLV63WvoDsrcQ/edit?usp=sharing.

Migrate OpenShift Components to use the new Image Digest Mirror Set (IDMS)

This doc list openshift components currently use ICSP: https://docs.google.com/document/d/11FJPpIYAQLj5EcYiJtbi_bNkAcJa2hCLV63WvoDsrcQ/edit?usp=sharing

Plan for ImageDigestMirrorSet Rollout :

Epic: https://issues.redhat.com/browse/OCPNODE-521

4.13: Enable ImageDigestMirrorSet, both ICSP and ImageDigestMirrorSet objects are functional

- Document that ICSP is being deprecated and will be unsupported by 4.17 (to allow for EUS to EUS upgrades)

- Reject write to both ICSP and ImageDigestMirrorSet on the same cluster

4.14: Update OpenShift components to use IDMS

4.17: Remove support for ICSP within MCO

- Error out if an old ICSP object is used

As an openshift developer, I want --idms-file flag so that I can fetch image info from alternative mirror if --icsp-file gets deprecated.

As a <openshift developer> trying to <mirror image for disconnect environment using oc command> I want <the output give the example of ImageDigestMirrorSet manifest> because ImageContentSourcePolicy will be replaced by CRD implemented in OCPBU-186 Image mirroring by tags

the ImageContentSourcePolicy manifest snippet from the command output will be updated to ImageDigestMirrorSet manifest.{}

workloads uses `oc adm release mirror` command will be impacted.

Feature Overview

Create a GCP cloud specific spec.resourceTags entry in the infrastructure CRD. This should create and update tags (or labels in GCP) on any openshift cloud resource that we create and manage. The behaviour should also tag existing resources that do not have the tags yet and once the tags in the infrastructure CRD are changed all the resources should be updated accordingly.

Tag deletes continue to be out of scope, as the customer can still have custom tags applied to the resources that we do not want to delete.

Due to the ongoing intree/out of tree split on the cloud and CSI providers, this should not apply to clusters with intree providers (!= "external").

Once confident we have all components updated, we should introduce an end2end test that makes sure we never create resources that are untagged.

Goals

- Functionality on GCP Tech Preview

- inclusion in the cluster backups

- flexibility of changing tags during cluster lifetime, without recreating the whole cluster

Requirements

- This Section:* A list of specific needs or objectives that a Feature must deliver to satisfy the Feature.. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |

|---|---|---|

| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |

| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

List any affected packages or components.

- Installer

- Cluster Infrastructure

- Storage

- Node

- NetworkEdge

- Internal Registry

- CCO

This epic covers the work to apply user defined labels GCP resources created for openshift cluster available as tech preview.

The user should be able to define GCP labels to be applied on the resources created during cluster creation by the installer and other operators which manages the specific resources. The user will be able to define the required tags/labels in the install-config.yaml while preparing with the user inputs for cluster creation, which will then be made available in the status sub-resource of Infrastructure custom resource which cannot be edited but will be available for user reference and will be used by the in-cluster operators for labeling when the resources are created.

Updating/deleting of labels added during cluster creation or adding new labels as Day-2 operation is out of scope of this epic.

List any affected packages or components.

- Installer

- Cluster Infrastructure

- Storage

- Node

- NetworkEdge

- Internal Registry

- CCO

Reference - https://issues.redhat.com/browse/RFE-2017

Enhancement proposed for GCP labels support in OCP, requires machine-api-provider-gcp to add azure userLabels available in the status sub resource of infrastructure CR, to the gcp virtual machines resource and the sub-resources created.

Acceptance Criteria

- Code linting, validation and best practices adhered to

- UTs and e2e are added/updated

Enhancement proposed for Azure tags support in OCP, requires install-config CRD to be updated to include gcp userLabels for user to configure, which will be referred by the installer to apply the list of labels on each resource created by it and as well make it available in the Infrastructure CR created.

Below is the snippet of the change required in the CRD

apiVersion: apiextensions.k8s.io/v1 kind: CustomResourceDefinition metadata: name: installconfigs.install.openshift.io spec: versions: - name: v1 schema: openAPIV3Schema: properties: platform: properties: gcp: properties: userLabels: additionalProperties: type: string description: UserLabels additional keys and values that the installer will add as labels to all resources that it creates. Resources created by the cluster itself may not include these labels. type: object

This change is required for testing the changes of the feature, and should ideally get merged first.

Acceptance Criteria

- Code linting, validation and best practices adhered to

- User should be able to configure gcp user defined labels in the install-config.yaml

- Fields descriptions

Enhancement proposed for GCP labels and tags support in OCP requires making use of latest APIs made available in terraform provider for google and requires an update to use the same.

Acceptance Criteria

- Code linting, validation and best practices adhered to.

Installer generates Infrastructure CR in manifests creation step of cluster creation process based on the user provided input recorded in install-config.yaml. While generating Infrastructure CR platformStatus.gcp.resourceLabels should be updated with the user provided labels(installconfig.platform.gcp.userLabels).

Acceptance Criteria

- Code linting, validation and best practices adhered to

- Infrastructure CR created by installer should have gcp user defined labels if any, in status field.

cluster-config-operator makes Infrastructure CRD available for installer, which is included in it's container image from the openshift/api package and requires the package to be updated to have the latest CRD.

Enhancement proposed for GCP tags support in OCP, requires cluster-image-registry-operator to add gcp userTags available in the status sub resource of infrastructure CR, to the gcp storage resource created.

cluster-image-registry-operator uses the method createStorageAccount() to create storage resource which should be updated to add tags after resource creation.

Acceptance Criteria

- Code linting, validation and best practices adhered to

- UTs and e2e are added/updated

Installer creates below list of gcp resources during create cluster phase and these resources should be applied with the user defined labels and the default OCP label kubernetes-io-cluster-<cluster_id>:owned

Resources List

| Resource | Terraform API |

|---|---|

| VM Instance | google_compute_instance |

| Image | google_compute_image |

| Address | google_compute_address(beta) |

| ForwardingRule | google_compute_forwarding_rule(beta) |

| Zones | google_dns_managed_zone |

| Storage Bucket | google_storage_bucket |

Acceptance Criteria:

- Code linting, validation and best practices adhered to

- List of gcp resources created by installer should have user defined labels and as well as the default OCP label.

Enhancement proposed for GCP labels support in OCP, requires cluster-image-registry-operator to add gcp userLabels available in the status sub resource of infrastructure CR, to the gcp storage resource created.

cluster-image-registry-operator uses the method createStorageAccount() to create storage resource which should be updated to add labels.

Acceptance Criteria

- Code linting, validation and best practices adhered to

- UTs and e2e are added/updated

Feature Overview

Much like core OpenShift operators, a standardized flow exists for OLM-managed operators to interact with the cluster in a specific way to leverage AWS STS authorization when using AWS APIs as opposed to insecure static, long-lived credentials. OLM-managed operators can implement integration with the CloudCredentialOperator in well-defined way to support this flow.

Goals:

Enable customers to easily leverage OpenShift's capabilities around AWS STS with layered products, for increased security posture. Enable OLM-managed operators to implement support for this in well-defined pattern.

Requirements:

- CCO gets a new mode in which it can reconcile STS credential request for OLM-managed operators

- A standardized flow is leveraged to guide users in discovering and preparing their AWS IAM policies and roles with permissions that are required for OLM-managed operators

- A standardized flow is defined in which users can configure OLM-managed operators to leverage AWS STS

- An example operator is used to demonstrate the end2end functionality

- Clear instructions and documentation for operator development teams to implement the required interaction with the CloudCredentialOperator to support this flow

Use Cases:

See Operators & STS slide deck.

Out of Scope:

- handling OLM-managed operator updates in which AWS IAM permission requirements might change from one version to another (which requires user awareness and intervention)

Background:

The CloudCredentialsOperator already provides a powerful API for OpenShift's cluster core operator to request credentials and acquire them via short-lived tokens. This capability should be expanded to OLM-managed operators, specifically to Red Hat layered products that interact with AWS APIs. The process today is cumbersome to none-existent based on the operator in question and seen as an adoption blocker of OpenShift on AWS.

Customer Considerations

This is particularly important for ROSA customers. Customers are expected to be asked to pre-create the required IAM roles outside of OpenShift, which is deemed acceptable.

Documentation Considerations

- Internal documentation needs to exists to guide Red Hat operator developer teams on the requirements and proposed implementation of integration with CCO and the proposed flow

- External documentation needs to exist to guide users on:

- how to become aware that the cluster is in STS mode

- how to become aware of operators that support STS and the proposed CCO flow

- how to become aware of the IAM permissions requirements of these operators

- how to configure an operator in the proposed flow to interact with CCO

Interoperability Considerations

- this needs to work with ROSA

- this needs to work with self-managed OCP on AWS

Market Problem

This Section: High-Level description of the Market Problem ie: Executive Summary

- As a customer of OpenShift layered products, I need to be able to fluidly, reliably and consistently install and use OpenShift layered product Kubernetes Operators into my ROSA STS clusters, while keeping a STS workflow throughout.

- As a customer of OpenShift on the big cloud providers, overall I expect OpenShift as a platform to function equally well with tokenized cloud auth as it does with "mint-mode" IAM credentials. I expect the same from the Kubernetes Operators under the Red Hat brand (that need to reach cloud APIs) in that tokenized workflows are equally integrated and workable as with "mint-mode" IAM credentials.

- As the managed services, including Hypershift teams, offering a downstream opinionated, supported and managed lifecycle of OpenShift (in the forms of ROSA, ARO, OSD on GCP, Hypershift, etc), the OpenShift platform should have as close as possible, native integration with core platform operators when clusters use tokenized cloud auth, driving the use of layered products.

- .

- As the Hypershift team, where the only credential mode for clusters/customers is STS (on AWS) , the Red Hat branded Operators that must reach the AWS API, should be enabled to work with STS credentials in a consistent, and automated fashion that allows customer to use those operators as easily as possible, driving the use of layered products.

Why it Matters

- Adding consistent, automated layered product integrations to OpenShift would provide great added value to OpenShift as a platform, and its downstream offerings in Managed Cloud Services and related offerings.

- Enabling Kuberenetes Operators (at first, Red Hat ones) on OpenShift for the "big3" cloud providers is a key differentiation and security requirement that our customers have been and continue to demand.

- HyperShift is an STS-only architecture, which means that if our layered offerings via Operators cannot easily work with STS, then it would be blocking us from our broad product adoption goals.

Illustrative User Stories or Scenarios

- Main success scenario - high-level user story

- customer creates a ROSA STS or Hypershift cluster (AWS)

- customer wants basic (table-stakes) features such as AWS EFS or RHODS or Logging

- customer sees necessary tasks for preparing for the operator in OperatorHub from their cluster

- customer prepares AWS IAM/STS roles/policies in anticipation of the Operator they want, using what they get from OperatorHub

- customer's provides a very minimal set of parameters (AWS ARN of role(s) with policy) to the Operator's OperatorHub page

- The cluster can automatically setup the Operator, using the provided tokenized credentials and the Operator functions as expected

- Cluster and Operator upgrades are taken into account and automated

- The above steps 1-7 should apply similarly for Google Cloud and Microsoft Azure Cloud, with their respective token-based workload identity systems.

- Alternate flow/scenarios - high-level user stories

- The same as above, but the ROSA CLI would assist with AWS role/policy management

- The same as above, but the oc CLI would assist with cloud role/policy management (per respective cloud provider for the cluster)

- ...

Expected Outcomes

This Section: Articulates and defines the value proposition from a users point of view

- See SDE-1868 as an example of what is needed, including design proposed, for current-day ROSA STS and by extension Hypershift.

- Further research is required to accomodate the AWS STS equivalent systems of GCP and Azure

- Order of priority at this time is

- 1. AWS STS for ROSA and ROSA via HyperShift

- 2. Microsoft Azure for ARO

- 3. Google Cloud for OpenShift Dedicated on GCP

Effect

This Section: Effect is the expected outcome within the market. There are two dimensions of outcomes; growth or retention. This represents part of the “why” statement for a feature.

- Growth is the acquisition of net new usage of the platform. This can be new workloads not previously able to be supported, new markets not previously considered, or new end users not previously served.

- Retention is maintaining and expanding existing use of the platform. This can be more effective use of tools, competitive pressures, and ease of use improvements.

- Both of growth and retention are the effect of this effort.

- Customers have strict requirements around using only token-based cloud credential systems for workloads in their cloud accounts, which include OpenShift clusters in all forms.

- We gain new customers from both those that have waited for token-based auth/auth from OpenShift and from those that are new to OpenShift, with strict requirements around cloud account access

- We retain customers that are going thru both cloud-native and hybrid-cloud journeys that all inevitably see security requirements driving them towards token-based auth/auth.

- Customers have strict requirements around using only token-based cloud credential systems for workloads in their cloud accounts, which include OpenShift clusters in all forms.

References

As an engineer I want the capability to implement CI test cases that run at different intervals, be it daily, weekly so as to ensure downstream operators that are dependent on certain capabilities are not negatively impacted if changes in systems CCO interacts with change behavior.

Acceptance Criteria:

Create a stubbed out e2e test path in CCO and matching e2e calling code in release such that there exists a path to tests that verify working in an AWS STS workflow.

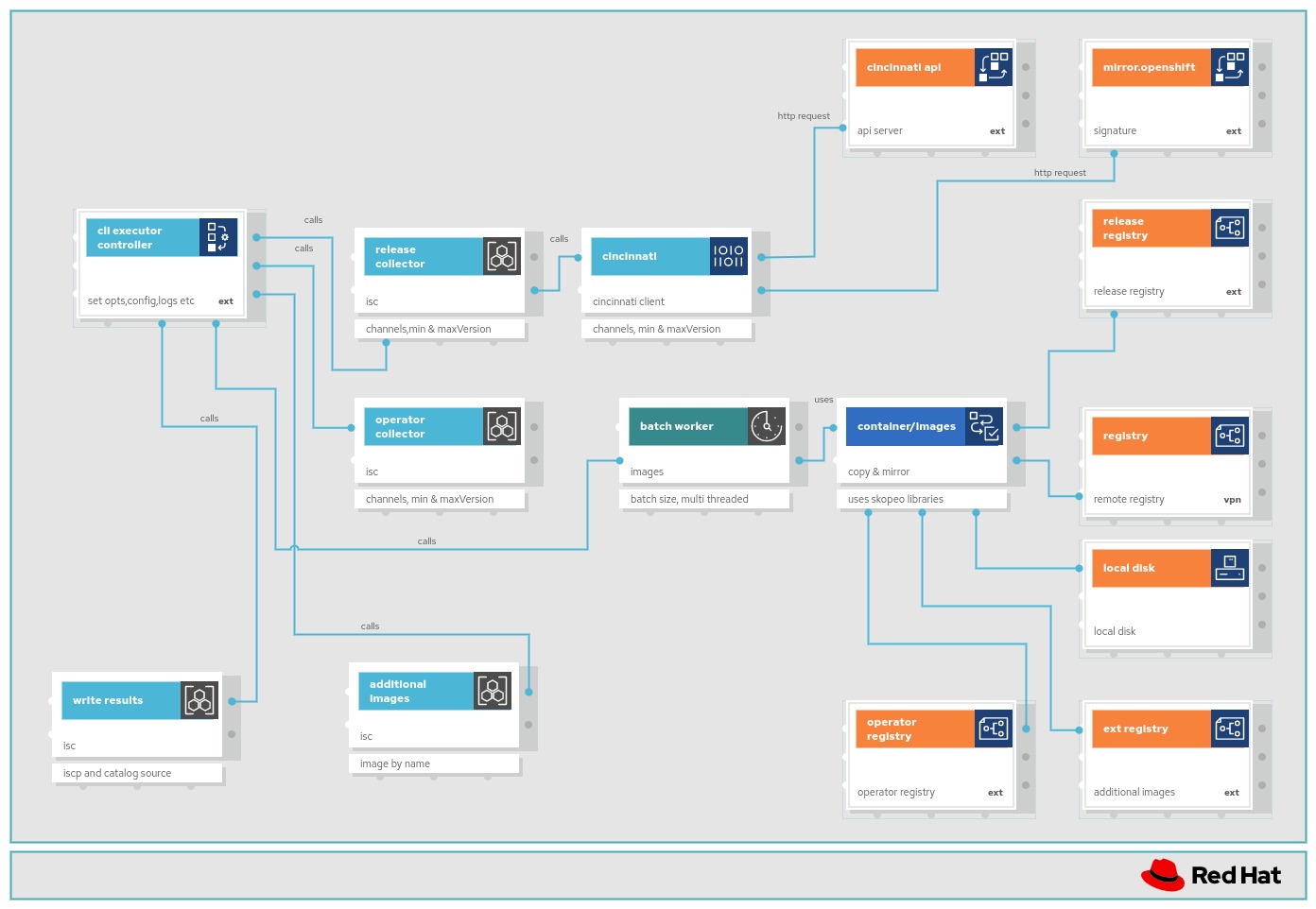

OC mirror is GA product as of Openshift 4.11 .

The goal of this feature is to solve any future customer request for new features or capabilities in OC mirror

In 4.12 release, a new feature was introduced to oc-mirror allowing it to use OCI FBC catalogs as starting point for mirroring operators.

Overview

As a oc-mirror user, I would like the OCI FBC feature to be stable

so that I can use it in a production ready environment

and to make the new feature and all existing features of oc-mirror seamless

Current Status

This feature is ring-fenced in the oc mirror repository, it uses the following flags to achieve this so as not to cause any breaking changes in the current oc-mirror functionality.

- --use-oci-feature

- --oci-feature-action (copy or mirror)

- --oci-registries-config

The OCI FBC (file base container) format has been delivered for Tech Preview in 4.12

Tech Enablement slides can be found here https://docs.google.com/presentation/d/1jossypQureBHGUyD-dezHM4JQoTWPYwiVCM3NlANxn0/edit#slide=id.g175a240206d_0_7

Design doc is in https://docs.google.com/document/d/1-TESqErOjxxWVPCbhQUfnT3XezG2898fEREuhGena5Q/edit#heading=h.r57m6kfc2cwt (also contains latest design discussions around the stories of this epic)

Link to previous working epic https://issues.redhat.com/browse/CFE-538

Contacts for the OCI FBC feature

- Sherine Khoury

- Luigi Mario Zuccarelli

- IBM John Hunkins

- CFE PM Heather Heffner

- WRKLDS PM Tomas Smetana

Feature Overview (aka. Goal Summary)

The OpenShift Assisted Installer is a user-friendly OpenShift installation solution for the various platforms, but focused on bare metal. This very useful functionality should be made available for the IBM zSystem platform.

Goals (aka. expected user outcomes)

Use of the OpenShift Assisted Installer to install OpenShift on an IBM zSystem

Requirements (aka. Acceptance Criteria):

Using the OpenShift Assisted Installer to install OpenShift on an IBM zSystem

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. Initial completion during Refinement status.

Interoperability Considerations

Which other projects and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

As a multi-arch development engineer, I would like to ensure that the Assisted Installer workflow is fully functional and supported for z/VM deployments.

Acceptance Criteria

- Feature is implemented, tested, QE, documented, and technically enabled.

- Stories closed.

Description of the problem:

Using DASD devices are not recognized correctly if attached and used for a zVM node.

<see attached screenshot>

Attach two FCP devices to a zVM node. Create a cluster and boot zVM node into discovery service. Host discovery panel shows an error for discovered host.

Steps to reproduce:

1. Attach two DASD devices to the zVM.

2. Create new cluster using the AI UI and configure discovery image

3. Boot zVM node

4. Waiting until node is showing up on the Host discovery panel.

5. DASD devices are not recognized as valid option

Actual results:

DASD devices can't be used as installable disk

Expected results:

DASD device can be used for installation. User can choose the on which device AI will install to.

Discovering an regression on staging where default is set to minimal ISO preventing installation of OCP 4.13 for s390x architecture.

See following older bugs addressing the same issue I guess

Description of the problem:

Using FCP (multipath) devices for zVM node

parmline:

rd.neednet=1 console=ttysclp0 coreos.live.rootfs_url=http://172.23.236.156:8080/assisted-installer/rootfs.img ip=10.14.6.8::10.14.6.1:255.255.255.0:master-0:encbdd0:none nameserver=10.14.6.1 ip=[fd00::8]::[fd00::1]:64::encbdd0:none nameserver=[fd00::1] zfcp.allow_lun_scan=0 rd.znet=qeth,0.0.bdd0,0.0.bdd1,0.0.bdd2,layer2=1 rd.zfcp=0.0.8007,0x500507630400d1e3,0x4000401e00000000 rd.zfcp=0.0.8107,0x50050763040851e3,0x4000401e00000000 random.trust_cpu=on rd.luks.options=discard ignition.firstboot ignition.platform.id=metal console=tty1 console=ttyS1,115200n8

shows disk limitation error in the UI.

<see attached image>

How reproducible:

Attach two FCP devices to a zVM node. Create a cluster and boot zVM node into discovery service. Host discovery panel shows an error for discovered host.

Steps to reproduce:

1. Attach two FCP devices to the zVM.

2. Create new cluster using the AI UI and configure discovery image

3. Boot zVM node

4. Waiting until node is showing up on the Host discovery panel.

5. FCP devices are not recognized as valid option

Actual results:

FCP devices can't be used as installable disk

Expected results:

FCP device can be used for installation (multipath must be activated after installation:

https://docs.openshift.com/container-platform/4.13/post_installation_configuration/ibmz-post-install.html#enabling-multipathing-fcp-luns_post-install-configure-additional-devices-ibmz)

Feature Overview (aka. Goal Summary)

Due to low customer interest of using Openshift on Alibaba cloud we have decided to deprecate then remove the IPI support for ALibaba Cloud

Goals (aka. expected user outcomes)

4.14

Announcement

- Update cloud.redhat.com with deprecation information

- Update IPI installer code with warning

- Update release node with deprecation information

- Update Openshift Doc with deprecation information

4.15

Archive code

Add a warning of depreciation in installer code for anyone trying to install Alibaba via IPI

The deprecation of support for the Alibaba Cloud platform is being postponed by one release, so we need to revert SPLAT-1094.

{}USER STORY:{}

As an user of the installer binary, I want to be warned that Alibaba support will be deprecated in 4.15, so that I'm prevented from creating clusters that will soon be unsupported.

{}DESCRIPTION:{}

Alibaba support will be decommissioned from both IPI and UPI starting in 4.15. We want to warn users of the 4.14 installer binary picking 'alibabacloud' in the list of providers.

{}ACCEPTANCE CRITERIA:{}

Warning message is displayed after choosing 'alibabacloud'.

{}ENGINEERING DETAILS:{}

Epic Goal

As an OpenShift infrastructure owner I need to deploy OCP on OpenStack with the installer-provisioned infrastructure workflow and configure my own load balancers

Why is this important?

Customers want to use their own load balancers and IPI comes with built-in LBs based in keepalived and haproxy.

Scenarios

- A large deployment routed across multiple failure domains without stretched L2 networks, would require to dynamically route the control plane VIP traffic through load-balancers capable of living in multiple L2.

- Customers who want to use their existing LB appliances for the control plane.

Acceptance Criteria

- Should we require the support of migration from internal to external LB?

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- QE - must be testing a scenario where we disable the internal LB and setup an external LB and OCP deployment is running fine.

- Documentation - we need to document all the gotchas regarding this type of deployment, even the specifics about the load-balancer itself (routing policy, dynamic routing, etc)

Dependencies (internal and external)

- Fixed IPs would be very interesting to support, already WIP by vsphere (need to Spike on this): https://issues.redhat.com/browse/OCPBU-179

- Confirm with customers that they are ok with external LB or they prefer a new internal LB that supports BGP

Previous Work:

vsphere has done the work already via https://issues.redhat.com/browse/SPLAT-409

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Epic Goal

As an OpenShift infrastructure owner I need to deploy OCP on OpenStack with the installer-provisioned infrastructure workflow and configure my own load balancers

Why is this important?

Customers want to use their own load balancers and IPI comes with built-in LBs based in keepalived and haproxy.

Scenarios

- A large deployment routed across multiple failure domains without stretched L2 networks, would require to dynamically route the control plane VIP traffic through load-balancers capable of living in multiple L2.

- Customers who want to use their existing LB appliances for the control plane.

Acceptance Criteria

- Should we require the support of migration from internal to external LB?

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- QE - must be testing a scenario where we disable the internal LB and setup an external LB and OCP deployment is running fine.

- Documentation - we need to document all the gotchas regarding this type of deployment, even the specifics about the load-balancer itself (routing policy, dynamic routing, etc)

Dependencies (internal and external)

- Fixed IPs would be very interesting to support, already WIP by vsphere (need to Spike on this): https://issues.redhat.com/browse/OCPBU-179

- Confirm with customers that they are ok with external LB or they prefer a new internal LB that supports BGP

Previous Work:

vsphere has done the work already via https://issues.redhat.com/browse/SPLAT-409

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Notes: https://github.com/EmilienM/ansible-role-routed-lb is an example of a LB that will be used for CI, can be used by QE and customers.

Epic Goal

As an OpenShift infrastructure owner I need to deploy OCP on OpenStack with the installer-provisioned infrastructure workflow and configure my own load balancers

Why is this important?

Customers want to use their own load balancers and IPI comes with built-in LBs based in keepalived and haproxy.

Scenarios

- A large deployment routed across multiple failure domains without stretched L2 networks, would require to dynamically route the control plane VIP traffic through load-balancers capable of living in multiple L2.

- Customers who want to use their existing LB appliances for the control plane.

Acceptance Criteria

- Should we require the support of migration from internal to external LB?

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- QE - must be testing a scenario where we disable the internal LB and setup an external LB and OCP deployment is running fine.

- Documentation - we need to document all the gotchas regarding this type of deployment, even the specifics about the load-balancer itself (routing policy, dynamic routing, etc)

Dependencies (internal and external)

- Fixed IPs would be very interesting to support, already WIP by vsphere (need to Spike on this): https://issues.redhat.com/browse/OCPBU-179

- Confirm with customers that they are ok with external LB or they prefer a new internal LB that supports BGP

Previous Work:

vsphere has done the work already via https://issues.redhat.com/browse/SPLAT-409

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Feature Overview

- Support OpenShift to be deployed on AWS Local Zones

Goals

- Support OpenShift to be deployed from day-0 on AWS Local Zones

- Support an existing OpenShift cluster to deploy compute Nodes on AWS Local Zones (day-2)

AWS Local Zones support - feature delivered in phases:

- Phase 0 (

OCPPLAN-9630): Document how to create compute nodes on AWS Local Zones in day-0 (SPLAT-635) - Phase 1 (

OCPBU-2): Create edge compute pool to generate MachineSets for node with NoSchedule taints when installing a cluster in existing VPC with AWS Local Zone subnets (SPLAT-636) - Phase 2 (

OCPBU-351): Installer automates network resources creation on Local Zone based on the edge compute pool (SPLAT-657)

Requirements

- This Section:* A list of specific needs or objectives that a Feature must deliver to satisfy the Feature.. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |

|---|---|---|

| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |

| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

<!--

Please make sure to fill all story details here with enough information so

that it can be properly sized and is immediately actionable. Our Definition

of Ready for user stories is detailed in the link below:

https://docs.google.com/document/d/1Ps9hWl6ymuLOAhX_-usLmZIP4pQ8PWO15tMksh0Lb_A/

As much as possible, make sure this story represents a small chunk of work

that could be delivered within a sprint. If not, consider the possibility

of splitting it or turning it into an epic with smaller related stories.

Before submitting it, please make sure to remove all comments like this one.

-->

{}USER STORY:{}

<!--

One sentence describing this story from an end-user perspective.

-->

As a [type of user], I want [an action] so that [a benefit/a value].

{}DESCRIPTION:{}

<!--

Provide as many details as possible, so that any team member can pick it up

and start to work on it immediately without having to reach out to you.

-->

{}Required:{}

...

{}Nice to have:{}

...

{}ACCEPTANCE CRITERIA:{}

<!--

Describe the goals that need to be achieved so that this story can be

considered complete. Note this will also help QE to write their acceptance

tests.

-->

{}ENGINEERING DETAILS:{}

<!--

Any additional information that might be useful for engineers: related

repositories or pull requests, related email threads, GitHub issues or

other online discussions, how to set up any required accounts and/or

environments if applicable, and so on.

-->

Feature Overview

Testing is one of the main pillars of production-grade software. It helps validate and flag issues early on before the code is shipped into productive landscapes. Code changes no matter how small they are might lead to bugs and outages, the best way to validate bugs is to write proper tests, and to run those tests we need to have a foundation for a test infrastructure, finally, to close the circle, automation of these tests and their corresponding build help reduce errors and save a lot of time.

Goal(s)

- How do we get infrastructure, what infrastructure accounts are required?

- Build e2e integration with openshift-release on AWS.

- Define MVP CI Jobs to validate (e.g., conformance). What tests are failing, are we skipping any? why?

Note: Sync with the Developer productivity teams might be required to understand infra requirements especially for our first HyperShift infrastructure backend, AWS.

Context:

This is a placeholder epic to capture all the e2e scenarios that we want to test in CI in the long term. Anything which is a TODO here should at minimum be validated by QE as it is developed.

DoD:

Every supported scenario is e2e CI tested.

Scenarios:

- Hypershift deployment with services as routes.

- Hypershift deployment with services as NodePorts.

DoD:

Refactor the E2E tests following new pattern with 1 HostedCluster and targeted NodePools:

- nodepool_upgrade_test.go

Goal

Productize agent-installer-utils container from https://github.com/openshift/agent-installer-utils

Feature Description

In order to ship the network reconfiguration it would be useful to move the agent-tui to its own image instead of sharing the agent-installer-node-agent one.

Goal